Positive Security Model: Next-Gen Alternative to Detect-And-Respond Technology (Virsec Blog)

Written by Satya Gupta, Founder & CTO, Virsec

Despite the increasing billions being poured into cybersecurity every passing year, instead of closing the gap with bad actors, we seem to be regressing. Not a day goes by when we do not hear about yet another company being attacked and having to pay a ridiculous sum as ransom. The current crop of cybersecurity tools is simply unable to keep up. Bad actors have our existing cyber defenses' number and can defeat them at will.

Very likely, there is no easy button for this conundrum. Still, this is certain – bad actors can routinely exploit systemic vulnerabilities in even our arsenal’s best-of-breed cyber security solutions. Errors in code are inevitable, but delivering products with systemic (or catastrophic) vulnerabilities that bad actors can exploit at will is quite another matter.

Systemic Vulnerability #1: Algorithmic Flaws

Cyber solutions that leverage the detect-and-respond (XDR) approach are built on the premise that the attacker will leverage only the “cataloged” behavior patterns during the attack. One slight deviation is enough to throw the XDR off its game.

One algorithmic flaw in an XDR revolves around the assumption an algorithm may make regarding a quantity-based threshold. For example, the algorithm may consider two files getting encrypted in a minute to be normal behavior, but what if 15 files were to get encrypted in a minute? The XDR algorithm may conclude that an attack is in progress. There is a heuristic threshold involved here. The attacker can slow their attack down to float under the radar of this threshold.
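To make the threshold problem concrete, here is a minimal sketch of a sliding-window rate check. The class name, the 60-second window, and the cut-off of 14 events are assumptions chosen so that the 15-files-per-minute case above raises an alarm; no real XDR product is being modeled.

```python
from collections import deque


class EncryptionRateDetector:
    """Toy detector: alarm when more than `max_events` file encryptions
    are seen inside a sliding window of `window_s` seconds.
    Both parameters are hypothetical values for illustration."""

    def __init__(self, max_events=14, window_s=60.0):
        self.max_events = max_events
        self.window_s = window_s
        self._times = deque()

    def observe(self, t):
        """Record one encryption event at time t (seconds); return True on alarm."""
        self._times.append(t)
        # Forget events that have aged out of the window.
        while self._times and t - self._times[0] >= self.window_s:
            self._times.popleft()
        return len(self._times) > self.max_events


def run(detector, event_times):
    """Feed every event to the detector and report whether any alarm fired."""
    return any([detector.observe(t) for t in event_times])


# 15 files in under a minute trips the alarm ...
fast_attack = [i * 4.0 for i in range(15)]
# ... but 100 files at one every 5 seconds never does.
slow_attack = [i * 5.0 for i in range(100)]

print(run(EncryptionRateDetector(), fast_attack))
print(run(EncryptionRateDetector(), slow_attack))
```

The slow run encrypts far more files in total, yet at no point does the window hold more than the allowed number of events.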

Another algorithmic flaw in the XDR involves the ability to determine if a file is encrypted or not. The typical XDR assumes that the attacker will encrypt the entire file in one fell swoop. Many XDRs leverage an established statistical artifact from the world of cryptography called the Chi-Square (or functionally similar) coefficient, as described here. This coefficient is an approximate or heuristic measure used to “establish” whether a file has been encrypted. It measures how far the distribution of byte values in a file deviates from the uniform distribution that encrypted data closely approximates; in practice, it separates plain text data from non-plain text data. An unencrypted text file will have a very high Chi-Square coefficient, whereas an encrypted file’s Chi-Square coefficient will be very low. Once again, the attacker can encrypt only some chunks of data in the file so that the Chi-Square coefficient returns a sufficiently high value. In that case, the attacker can continue to float under this radar.

Instead of using the Chi-Square coefficient discussed above, other security tools may use equivalent coefficients such as the Shannon Entropy, Arithmetic Mean, and Monte Carlo coefficients. Inherently all coefficients use the same general principle and have heuristic thresholds.
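As a rough illustration of the Chi-Square measure described above, the sketch below computes a Pearson chi-square statistic of a buffer's byte counts against a uniform distribution. The function name and sample data are assumptions, and `os.urandom` is used only as a stand-in for ciphertext; this is not the implementation of any particular product.

```python
import os
from collections import Counter


def chi_square(data: bytes) -> float:
    """Pearson chi-square of byte-value counts against a uniform
    distribution over the 256 possible byte values.
    Assumes `data` is non-empty."""
    expected = len(data) / 256
    counts = Counter(data)
    return sum((counts.get(b, 0) - expected) ** 2 / expected for b in range(256))


# Plain English text uses only a few dozen byte values, so it is far from uniform.
plain = b"The quick brown fox jumps over the lazy dog. " * 2000
# Random bytes stand in for a fully encrypted file (an assumption for this sketch).
random_like = os.urandom(len(plain))

print("plain text :", round(chi_square(plain)))
print("random data:", round(chi_square(random_like)))
```

The plain-text value comes out orders of magnitude larger than the random-data value, which is the gap a heuristic cut-off tries to exploit.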

As clever as this heuristic measure appears to be, a few major shortcomings, as below, can easily subvert the benefits of this algorithm:

- To prevent triggering a false alarm, the XDR must watch the application normally execute for a sufficiently long time to “learn” if encrypting files represents the expected behavior of the app or not. Why must the XDR wait for a sufficiently long time? Because there is no knowing if any yet unexercised functionality may also encrypt a file legitimately.

- Unfortunately, there is no clear-cut value of the encryption coefficient where the XDR can definitively conclude that a file is encrypted. Furthermore, the cut-off can be different for different types of files, such as image and data files.

- Similarly, no clear-cut number of encrypted files or time period will definitively signify if the said encryption activity is normal or not.

Bad actors didn’t take long to discover and circumvent these significant shortcomings in the typical XDR. As regards the first shortcoming, bad actors realized that when an executable is presented to the CPU for execution for the very first time, the cyber security tool is at its most vulnerable since there is no “learning” history of the legitimate behavior of that application.

To abuse this “learning period” flaw, bad actors can create hundreds of mutations of a given malware. Since the EDR learns based on checksums, this little trick resets any learning the security tool may have performed on an earlier mutation.
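The checksum problem is easy to see in a toy model. In the sketch below, a dictionary keyed on SHA-256 stands in for whatever behavioral history a tool has accumulated; the `learned` store, the `mutate` helper, and the sample bytes are all hypothetical.

```python
import hashlib


def sha256_hex(blob: bytes) -> str:
    """Hex SHA-256 digest of a byte string."""
    return hashlib.sha256(blob).hexdigest()


def mutate(blob: bytes, n: int) -> bytes:
    """Append four padding bytes derived from n. In this toy the payload
    is unchanged, but the checksum of the whole blob is different."""
    return blob + n.to_bytes(4, "little")


# Harmless placeholder bytes standing in for an executable image.
original = b"placeholder bytes standing in for an executable image"

# Hypothetical learning store: checksum -> whatever history was learned.
learned = {sha256_hex(original): "behaviour profile built up over time"}

variants = [mutate(original, i) for i in range(300)]
unknown = [v for v in variants if sha256_hex(v) not in learned]

print(len(unknown), "of", len(variants), "variants have no learning history")
```

Every variant hashes to a new value, so a store keyed only on checksums treats each one as a first-time execution.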

To abuse the second shortcoming around the encryption coefficient, attackers switched tactics. Instead of encrypting the entire contents of the targeted file, bad actors started encrypting only every other 16 bytes. They could also change the file extension to throw the XDR off. Lo and behold, malware like LockFile delivered the “success” bad actors were striving for.

To fly under the radar of the quantitative time threshold shortcoming, bad actors reduced the number of files encrypted per minute.

The above narrative describes how bad actors outmaneuvered the XDR's algorithm for ransomware. Long story short, we could similarly explain how the attackers played cat-and-mouse games with the XDR algorithms for other behavior.
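The effect of encrypting only alternate 16-byte blocks can be sketched with Shannon entropy, one of the equivalent coefficients mentioned earlier. Random bytes stand in for ciphertext here, and the 7.5 bits-per-byte cut-off is a hypothetical threshold, not a value taken from any product.

```python
import math
import os
from collections import Counter

BLOCK = 16
THRESHOLD = 7.5  # hypothetical "looks encrypted" cut-off, in bits per byte


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of the byte distribution, in bits per byte (0 to 8)."""
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def scramble_every_other_block(data: bytes) -> bytes:
    """Overwrite alternate 16-byte blocks with random bytes
    (a stand-in for real ciphertext in this sketch)."""
    out = bytearray(data)
    for start in range(0, len(data), 2 * BLOCK):
        end = min(start + BLOCK, len(data))
        out[start:end] = os.urandom(end - start)
    return bytes(out)


def looks_encrypted(data: bytes) -> bool:
    return shannon_entropy(data) >= THRESHOLD


plain = b"The quick brown fox jumps over the lazy dog. " * 2000
partial = scramble_every_other_block(plain)
full = os.urandom(len(plain))

for name, blob in [("plain", plain), ("partial", partial), ("full", full)]:
    print(name, round(shannon_entropy(blob), 2), looks_encrypted(blob))
```

Half of the file is destroyed in the partial case, yet its entropy lands between the plain and fully scrambled values and stays under the assumed cut-off.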

Systemic Vulnerability #2: Learning-Related Vulnerabilities

The latest version (v12) of the MITRE ATT&CK Framework catalogs 14 tactics and 401 (sub)techniques and describes procedures (TTPs) at a high level. These TTPs are best described at the highest level as a short sequence of system calls needed to complete a MITRE tactic such as Privilege Escalation or Lateral Movement. As the code being monitored emits a known TTP, the XDR bumps up its suspicion/maliciousness score depending on the nature of the emitted TTP. When the suspicion/maliciousness score crosses an XDR-specific threshold, the XDR declares that the code being monitored is malicious.

Usually, this would be easy, but the fact that a legitimate piece of code may emit a TTP legitimately complicates the situation. Now the XDR must be able to discount those TTPs that the application issued legitimately. If the XDR does not discount such a legitimate action, then this TTP may be the straw that breaks the camel’s back and tips the aggregate suspicion/maliciousness score beyond the threshold. At that point, the XDR may terminate the code, whereas all that happened was that the code executed a perfectly legitimate action. This is the very definition of a false positive. A false positive can result in business discontinuity. In conclusion, the dependence on fine-grained “learning” of the application’s legitimate behavior cannot be overstated.
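The scoring logic described above can be sketched in a few lines. The technique labels, weights, and threshold below are hypothetical values picked for illustration; real products use their own catalogs and scoring.

```python
# Hypothetical suspicion weight per observed technique.
TTP_WEIGHTS = {
    "process_injection": 40,
    "credential_dump": 50,
    "scheduled_task_create": 20,
    "registry_run_key": 20,
    "archive_and_compress": 15,
}
THRESHOLD = 60  # hypothetical score at which code is declared malicious


def verdict(events, learned_legitimate=frozenset()):
    """Sum the weights of observed TTPs, skipping any that were learned
    to be legitimate for this application. Returns (score, label)."""
    score = sum(
        TTP_WEIGHTS.get(e, 0) for e in events if e not in learned_legitimate
    )
    return score, ("malicious" if score >= THRESHOLD else "benign")


# A made-up backup agent doing ordinary work.
backup_agent = [
    "scheduled_task_create",
    "archive_and_compress",
    "registry_run_key",
    "archive_and_compress",
]

# Without learning, the benign agent crosses the threshold: a false positive.
print(verdict(backup_agent))  # (70, 'malicious')

# With its routine actions learned, the same activity stays under it.
learned = frozenset({"scheduled_task_create", "archive_and_compress"})
print(verdict(backup_agent, learned))  # (20, 'benign')
```

The only difference between the two verdicts is how much of the application's legitimate behavior had been learned beforehand.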

Systemic Vulnerability #3: Threshold-based Vulnerabilities

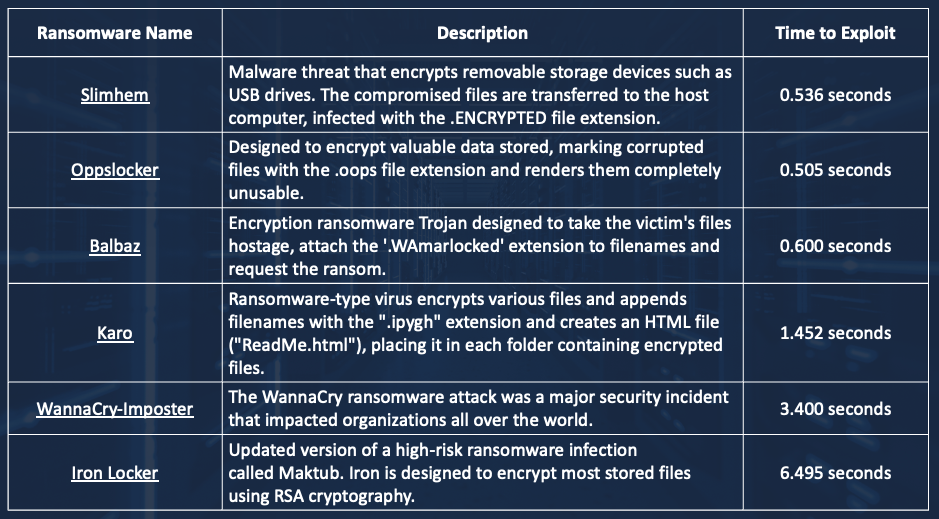

Knowing that XDRs work based on a time-based suspicion threshold, bad actors can play games such as creating multiple processes and multiple threads to distribute the malicious action around such that the suspicion score of any one process or any one thread does not exceed the threshold at which the XDR will raise the alarm. Or they can create one or more malware that completes its evil intent quickly – hence the name short fuse malware. A partial list of some recent short fuse malware that we found in the wild is shown below:

All these short-fused malware readily evaded detection by leading commercial XDR products.
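A toy model shows why per-process scoring can be sidestepped by spreading activity around. The process IDs, weights, and threshold are invented for this sketch, and it assumes the detector scores each process in isolation.

```python
from collections import defaultdict

THRESHOLD = 60  # hypothetical per-process alarm threshold


def per_process_scores(events):
    """events: iterable of (pid, weight) pairs. Returns {pid: total score}."""
    scores = defaultdict(int)
    for pid, weight in events:
        scores[pid] += weight
    return dict(scores)


def alarms(events):
    """Process IDs whose own score reaches the threshold."""
    return sorted(
        pid for pid, s in per_process_scores(events).items() if s >= THRESHOLD
    )


# Hypothetical suspicion weights for six actions; they sum to 115.
actions = [20, 15, 20, 15, 25, 20]

single = [(100, w) for w in actions]                     # one process does it all
spread = [(100 + i, w) for i, w in enumerate(actions)]   # one action per process

print(alarms(single))  # [100]
print(alarms(spread))  # []
```

The total suspicious activity is identical in both runs; only the bookkeeping unit differs.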

Systemic Vulnerability #4: Analysis-Based Vulnerability

As I read through the referenced ‘Bleeping Computer’ article, the following sentence caught my attention: “Microsoft Security Copilot is an AI-powered security analysis tool that enables analysts to respond to threats quickly, process signals at machine speed, and assess risk exposure in minutes,” Redmond says. It became abundantly evident that since the XDR first collects and only subsequently responds to a series of TTPs that have occurred in the past, the XDR will never be able to prevent some amount of damage from occurring, even if the telemetry were to be processed by software that Elon Musk deemed to be so powerful that it could wipe out humanity. Some XDRs operate locally, whereas others operate from the cloud. The latter category is at an even more significant disadvantage because the detection telemetry needs to make its best-effort way through the network to the cloud before the containment telemetry can trudge back to the affected workload.

The gap between time to exploitation and response time is yet another systemic vulnerability in the XDR. Many proponents of XDR will gladly hire a battery of SOC Analysts to ensure that the XDR does not act on false positive information. This post-XDR analysis not only adds to the response time, which allows attackers to linger in the workload longer, but even worse, it adds OPEX.

Summarizing the Systemic Vulnerabilities of the Detect-And-Respond Security Model

We’ve previously described how EDRs suffer from the following vulnerabilities that attackers abuse successfully:

- Algorithm-based: Behavioral telemetry assumptions can be easily subverted.

- Learning-based: Without exercising the application thoroughly, it is hard to distinguish between legitimate application behavior and malicious behavior. Inadequate cataloging of legitimate behavior will trigger a false positive determination from the EDR.

- Threshold-based: The thresholds for suspicion scores and event count per period are based on experience. Attackers can readily fly under these radars.

- Analysis-based: Despite throwing sophisticated algorithms at the problem, the EDR cannot make decisions in time to prevent damage.

Is there any Respite from Systemic Vulnerabilities in the XDR?

An analogy, as below, may point us to mitigation.

What if I were to offer you a plane ticket to go from, say, New York to Miami and tell you that the reason the ticket from this (hypothetical) airline is relatively cheaper is that the airline can reduce costs by not performing all the safety inspections that competing airlines perform? Despite the missing safety inspections, there is nothing to worry about; the pilots leverage the best-of-breed AI/ML tools that will respond within minutes if bad things happen as the plane flies.

Most people would likely refuse to fly this hypothetical airline; I can’t blame them for not being comfortable trusting their lives with a pilot they barely know. The consequences of the pilot failing to deliver can be catastrophic.

This analogy closely emulates the value proposition that XDRs offer when protecting code. Like our hypothetical low-cost airline, XDRs will let new code execute without performing any prior safety diligence, with the expectation that when malicious activity occurs, the XDR will be able to detect threats in minutes. Despite throwing the best analytics technology at the problem, bad actors continue to exploit the time-based systemic vulnerability described above.

As in the case of the hypothetical airline, establishing the provenance and integrity of code upfront may be the way to break out of the various systemic vulnerabilities previously described. As in the airline example, exercising diligence ensures malicious code doesn’t get to run. This is what Zero-Trust for applications is all about.

What advantages does the Positive Security Model offer over the Negative (Detect-And-Respond) Model?

Both models have pros and cons, as described below.

The Positive (or Zero Trust) Security Model

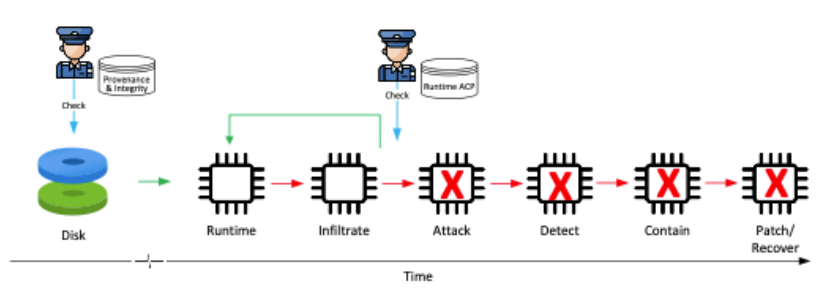

The Positive Security model protects code at two levels: code-on-disk and code-in-memory, as shown in the diagram below. In this model, when it comes to code-on-disk, any unauthorized code whose provenance and integrity cannot be established beforehand does not get to execute. Similarly, at runtime, the positive security model ensures that a vulnerable application does not convert bad actor-provided data into code and start running it. Please see the figure below. The Positive Security model prevents the code from getting into attack and later stages.
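For the code-on-disk level, the deny-by-default idea can be sketched as a simple allowlist keyed on a cryptographic hash. The `Allowlist` class, its methods, and the publisher name are hypothetical and do not represent any vendor's API; a real product would also verify signatures and provenance rather than rely on a bare digest.

```python
import hashlib


def sha256_hex(blob: bytes) -> str:
    """Hex SHA-256 digest of a code image."""
    return hashlib.sha256(blob).hexdigest()


class Allowlist:
    """Toy deny-by-default execution gate keyed on SHA-256."""

    def __init__(self):
        self._trusted = {}  # digest -> publisher (provenance note)

    def register(self, blob: bytes, publisher: str) -> None:
        """Record a code image whose provenance has been established."""
        self._trusted[sha256_hex(blob)] = publisher

    def may_execute(self, blob: bytes) -> bool:
        """Only registered, unmodified images are allowed to run."""
        return sha256_hex(blob) in self._trusted


gate = Allowlist()
app = b"bytes of a known-good application image"
gate.register(app, publisher="Example ISV")  # hypothetical publisher

tampered = app + b"\x00"          # any modification changes the digest
never_seen = b"bytes of some unknown program"

print(gate.may_execute(app))         # True
print(gate.may_execute(tampered))    # False
print(gate.may_execute(never_seen))  # False
```

Nothing in this check depends on recognizing malicious behavior; unknown or altered code is refused simply because it was never vouched for.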

The significant benefits of the Positive Security Model include the following:

- Preventing supply chain attacks from succeeding because the chain of trust is extended to the ISV who released the software in the first place.

- Helping meet (partially) several compliance requirements around System Integrity, Continuous Monitoring, Vulnerability Management, Risk Assessment, etc.

- Ensuring that no malware will execute, be it from yesterday, today, or tomorrow. With this approach, tracking the 450K+ new malware that surfaces daily is unnecessary. No need to keep watching threat feeds either.

- Protecting apps against attacks even if known or unknown vulnerabilities in the code remain unpatched. This ensures that business continuity and revenue generation are not affected.

There are a few cautions to note with the code-on-disk stage protection offered by the Positive Security model. Enterprises have previously run into issues when:

- They do not have good change management practices already in place.

- They do not have sophisticated tools that can help to establish the provenance and integrity of code automatically and will find it very cumbersome to establish the integrity of code.

It is no wonder that many CIOs/CISOs carry battle scars from past attempts to establish the integrity of code, as they looked to the tail end of the supply chain to establish it.

Fortunately, with the help of vendor-provided automation tools, it is possible to extend the chain of trust for code all the way to the head of the supply chain, i.e., the ISV that created the code. Then as the enterprise’s Orchestration Tools deliver new code to the workload, appropriate vendor APIs from a sophisticated Positive Security model can facilitate the delivery of the provenance and integrity data atomically into a centralized Threat Intelligence database. This means that applications will not get blocked, and enterprises will not suffer from business discontinuity.

Data collected from these automation tools indicates that code on most workloads with similar OSs can be provenanced within a few hours. When code is subsequently patched, ascertaining its provenance takes a fraction of the time needed to establish provenance for the first time. This ability to establish provenance lifts a massive load off the CISO/CIO’s mind.

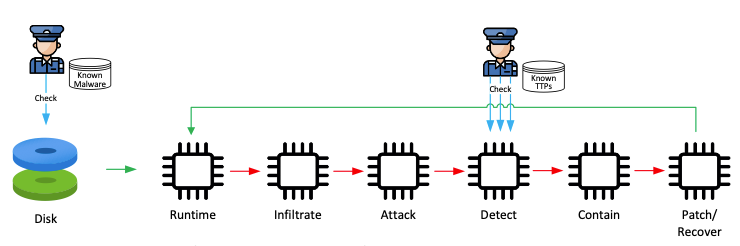

The Negative (Detect-and-Respond) Security Model

The Negative Security Model also protects code at two levels: code-on-disk and code-in-memory, as shown in the diagram below. As can be seen, the attack enjoys an extended timeline, letting the attacker dwell for a long time. When protecting code-on-disk, the Negative Security model checks to see if the code is known malware. Code that survives this check is considered benign until it turns hostile at runtime, as determined by the emission of multiple TTPs, as shown in the diagram below.

One “perceived” benefit of the Negative (XDR) Security Model is that many in the cyber industry believe that the XDR model is very easy to deploy. You deploy, and voila, minutes later, the workload is protected.

This benefit must be weighed against the risk the organization will inevitably be exposed to. We must ask ourselves the following questions:

- Is a security solution with multiple systemic vulnerabilities that attackers know how to exploit worth deploying? What good is a tank whose turret jams from time to time? Should we invest in a fast car with shiny paint that could sputter out without notice?

- Will the XDR deliver false positive outcomes and affect my business continuity? Learning time could easily result in a loss of business continuity.

- Should we spend $$ on an army of analysts working in highly stressful conditions?

- Do we have time to deal with a detect-and-respond solution, or do we desire a protection solution that lets us focus on our mainstream business?

Positive Security Model Delivers on Prescriptive Government Directives

With cyber-attacks becoming more vicious by the day, the Government has been stepping in with increasingly prescriptive advice on how to reduce the residual risk that enterprises carry. The recent TSA Directive of July ’22 mandates that all Information and Operational Technology systems of enterprises in the Critical Infrastructure space must leverage the Positive Security Model’s application Allowlisting capability. Some of the critical infrastructure sectors (Healthcare, Financial, Telecom, Defense, Energy, Chemical, etc.) no longer have a choice but to embrace the Positive Security model to reduce the residual risk that they carry.

Positive Security Model: A very effective Compensating Control

Vulnerabilities in apps have become so endemic that in March ’23, the White House was compelled to issue a cyber strategy policy. Per this policy, the Administration proposes to hold ISVs responsible for vulnerabilities in their code. Given where we are today, this proposal appears to be a little hard to implement. That said, with billions of lines of code already deployed and with very little oversight on Open-Source projects, vulnerabilities in apps are inevitable and we will have to live with them for the foreseeable future.

Many insurance companies are denying cyber insurance claims when the attack is launched by a nation-state. In those cases, the only defense is to have high-efficacy cyber controls like the Positive Security Model controls and self-insure.

Conclusion

Despite the billions poured into it, the Detect-and-Respond (or Negative) Security model hasn’t lived up to its promise of keeping enterprises safe. What is the reason to persist with solutions that don’t protect? Several federal agencies have identified the shortcomings of the Negative Security Model and are recommending adopting the Zero Trust Positive Security model. The Positive Security Model can defend against known and unknown malware, even if the workload is not patched against known or unknown vulnerabilities with remote code execution potential. In the past, Application Allowlisting had acquired the stigma of being very cumbersome. More recently, with sophisticated automation, establishing provenance and integrity from the developer of the code is no longer the problem it used to be. Cyber losses have become so staggering that the Government is now not only providing prescriptive advice but also mandating that enterprises, especially those in the Critical Infrastructure sector, implement cyber controls from the Positive Security Model family.